Subscribe to Gorilla Grants

We regularly run grants to help researchers and lecturers get their projects off the ground. Sign up to get notified when new grants become available

How Game Elements Promote Recruitment, Engagement and Learning

In the last decade, gamification has been increasingly employed as a tool to promote recruitment, engagement, and learning in a range of fields (e.g., marketing, education, health and productivity tools, and fundamental research). Here, we review the concept of gamification in behavioral science research, outline some of the different ways it is used, and discuss examples where gamification has been employed to great effect in online research.

The gamification trend is believed to have started around 2010; however, the concept of embedding tasks within a game to increase learning and motivation has been around for hundreds of years (Zichermann & Cunningham, 2011). A game is a system in which players engage in a fictional challenge, defined by rules, interactivity, and feedback, which results in a quantifiable outcome often provoking an emotional reaction (Kankanhalli et al., 2012). Given this definition, we can define gamification as the use of these game elements and techniques in non-game contexts (Deterding et al., 2011; Kankanhalli et al., 2012).

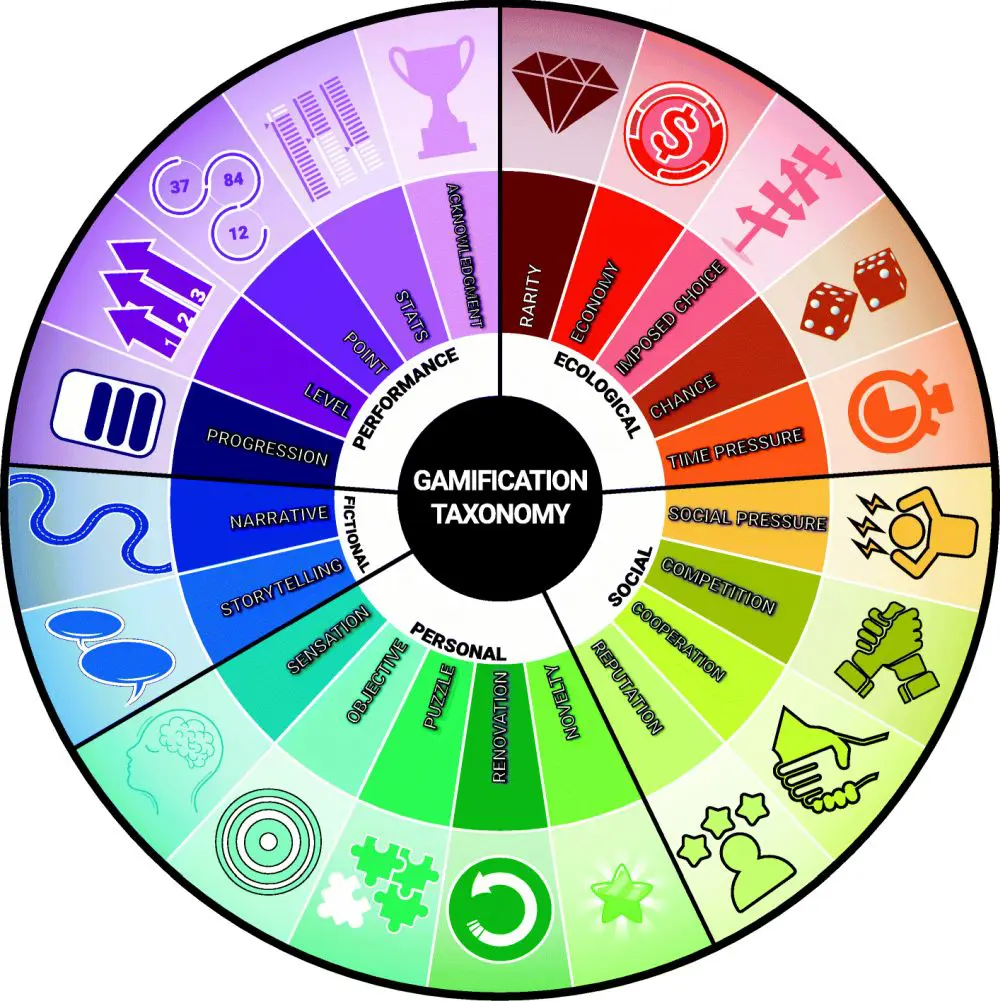

There are many potential ways to gamify a behavioral science task, each of which elicits different levels of motivation and requires different levels of input from the experimenter. To help researchers decide which game elements are most appropriate in a particular context, Toda et al. (2019) eloquently summarize the different approaches to gamification, using a clear taxonomy of game elements (e.g., points, badges, and leader boards). The authors also distinguish between extrinsic and intrinsic game elements. An extrinsic game element can be perceived clearly and objectively by the user, whereas an intrinsic element is presented more subtly so that the user is unaware of perceiving it (Toda et al., 2019).

In their taxonomy, Toda et al. (2019) described 21 game elements and their synonyms. These elements were validated by two surveys with experts in the field of gamification in education. This resulted in a taxonomy of five gamification dimensions: Performance/measurement, Social, Ecological, Personal, and Fictional (see the image below). Whilst this taxonomy is highly useful for anyone wishing to develop a gamified task, it is worth remembering that the taxonomy proposed by Toda et al. (2019) was based solely on expert opinion, not on actual users.

Performance/measurement elements are the most commonly used in gamification. They reward performance using points, levels, and achievements/badges. There are hundreds of examples where performance measurements have been used to turn tasks into games, such as DataCamp (gamifying computer programming education), Habitica (gamifying habit formation), and Peloton (gamifying fitness), to name but a few. All of these examples use extrinsic features to motivate users and provide feedback.

Another common feature of gamification is comparing a user's learning and performance with those of other users, i.e., the social dimension. The most common way to do this is through leader boards, which push users either to work hard to catch up with friends or to work hard to stay on top and maintain their reputation. Depending on the task, there are plenty of opportunities for social interaction via either competition or cooperation. Towards the end of this article, we will describe the Hive, a fun and powerful game for exploring conformity and diversity across multiple individuals.

The ecological dimension relates to the environment implemented in gamification. This dimension includes elements such as chance (manipulating the probability of winning or the size of the prize), time pressure, or rarity of prizes (e.g., the availability of certain Pokémon). Decades of research in neuroeconomics and value-based decision-making have provided robust neurocomputational models that describe these behaviors (Rangel et al., 2008). Crucially, the emerging field of computational psychiatry is linking these mechanisms to psychological and psychiatric phenotypes (Montague et al., 2012). Gamification could therefore become a useful tool for clinical diagnosis and treatment. In the case studies section, we will discuss FunMaths, which uses gamification to help children who struggle with arithmetic and with understanding number relations.

The personal dimension relates to the user's experience of the environment. For example, is the game repetitive, or does it stay fresh and novel? Is it simple, or do the tasks challenge the user? Are there pleasing sensations, such as vibrant colors or sounds, that improve the user's experience? These elements are implicitly rewarding to the user.

Lastly, the fictional dimension aims to link the user experience with context through narrative and storytelling elements. In these games, the user may be unaware that they are learning a skill or performing a task. A number of educational board games using the fictional dimension have been developed over the years (for example, playing CBT), and more recently, apps are being developed to measure behaviors through naturalistic gameplay (Sea Hero Quest) and even to treat behavioral conditions like ADHD (NeuroRacer). We will later discuss Treasure Collector, a game for children that takes a basic psychological paradigm and turns it into an exciting adventure!

In normal settings, experimental tasks and questionnaires may be tedious for participants. They often use simplistic stimuli, are repetitive, and provide no feedback on personal performance or the performance of others (scoring poorly on four of the five gamification dimensions mentioned above). In any experiment or survey, participants’ attention declines over time, increasing the error rate. This is amplified in remote and online testing, where there are likely to be any number of distractions that are not present in the lab. When the experimenter is not present, participants are more likely to drop out if they become bored, especially when experiments require more than one session (Palan & Schitter, 2018). It has been suggested that engaging and rewarding participants through gamification can help solve these problems and thereby improve data quality by increasing attention and motivation.

Bailey et al. (2015) investigated the impact of gamification on survey responses. Here, the authors refer to ‘soft gamification’, where traditional survey responses were replaced with more interesting tools like dragging and selecting images. We consider this an increase in the personal dimension in Toda et al.’s (2019) taxonomy. Bailey et al. (2015) found that gamification led to richer responses (a significant increase in the numbers of words used in responding) and participants were engaged for longer. This is just one of many examples that show the benefits of gamifying research.

Looyestyn et al. (2017) conducted a systematic review of online studies employing gamification to investigate the effects of game-based environments on online research. To do so, they looked at different measures of engagement, e.g., the amount of time spent on the program and the number of visits. Taken together, their results suggest that gamification increases engagement in online programs and enhances other outcomes, such as learning and health behavior. However, the authors also suggest that the impact of gamification features declines over time as the novelty of points, levelling up, and badges wears off.
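Both engagement measures mentioned here can be derived from a simple session log. The sketch below assumes a hypothetical log format of (participant, session start, session end) records with ISO 8601 timestamps; it is an illustration, not the procedure used in any of the reviewed studies.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical session log: (participant_id, session_start, session_end)
sessions = [
    ("p01", "2021-03-01T10:00", "2021-03-01T10:12"),
    ("p01", "2021-03-02T09:30", "2021-03-02T09:38"),
    ("p02", "2021-03-01T14:00", "2021-03-01T14:05"),
]


def engagement_summary(log):
    """Return {participant: (number_of_visits, total_minutes)}."""
    visits = defaultdict(int)
    minutes = defaultdict(float)
    for pid, start, end in log:
        duration = datetime.fromisoformat(end) - datetime.fromisoformat(start)
        visits[pid] += 1
        minutes[pid] += duration.total_seconds() / 60
    return {pid: (visits[pid], minutes[pid]) for pid in visits}


summary = engagement_summary(sessions)
print(summary)  # {'p01': (2, 20.0), 'p02': (1, 5.0)}
```

Comparing these two numbers between a gamified and a non-gamified version of the same study is one straightforward way to quantify the engagement effect.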

Looyestyn et al. (2017) cite the example of the gamified app Foursquare, which was hugely successful upon release but failed to retain users after 6–12 months. Most of us can think of other websites and apps that were hugely popular at first but failed to retain customers. This suggests that relying solely on performance/measurement elements will produce initial spikes in engagement but fail to retain customers/participants. This may not be a problem for most behavioral science experiments, where long-term retention is not required; however, we suggest it is worth considering when developing longitudinal and multi-session games.

The systematic review by Looyestyn et al. (2017) provides the strongest evidence to date that gamification significantly increases participant engagement. However, it is also worth highlighting some limitations. First, the positive effect of gamification was not found for all measures of engagement, which casts some doubt on the generalizability of these results. Second, although the authors began with 1,017 online studies, only 15 remained for analysis after the exclusion criteria were applied. This small sample, together with large heterogeneity in population, methods, and outcomes, meant the studies were not directly comparable, so it was not possible to conduct a meta-analysis.

To overcome this limitation, future research should provide more standardized testing, measures, and analysis methods in online research. There is some promising work in this direction. In a recent study, Chierchia et al. (2019) provided a battery of novel ability tests to investigate non-verbal abstract reasoning. The battery was validated on adolescents and adults who performed matrix reasoning by identifying relationships between shapes. While non-verbal ability tests are usually protected by copyright, Chierchia et al. (2019) made their battery open access for academic research.

Dyscalculia is a developmental condition that affects the ability to acquire arithmetical skills; it is sometimes described as ‘dyslexia for numbers’. Individuals with dyscalculia lack an intuitive grasp of numbers and their relations. Reports suggest that around 5–7% of children may have developmental dyscalculia (a similar prevalence to developmental dyslexia), and it is estimated that low numeracy skills cost the UK £2.4 billion annually (Butterworth et al., 2011). Butterworth et al. (2011) further propose that bringing the lowest 19.4% of Americans up to the minimum level of numeracy would lead to a 0.74% increase in GDP growth.

There are clear economic and social benefits to improving arithmetical skills in the general population. Professor Diana Laurillard (Professor of Learning with Digital Technologies at UCL Institute of Education) developed a series of math games to train math skills in dyscalculic children through simple manipulations of objects.

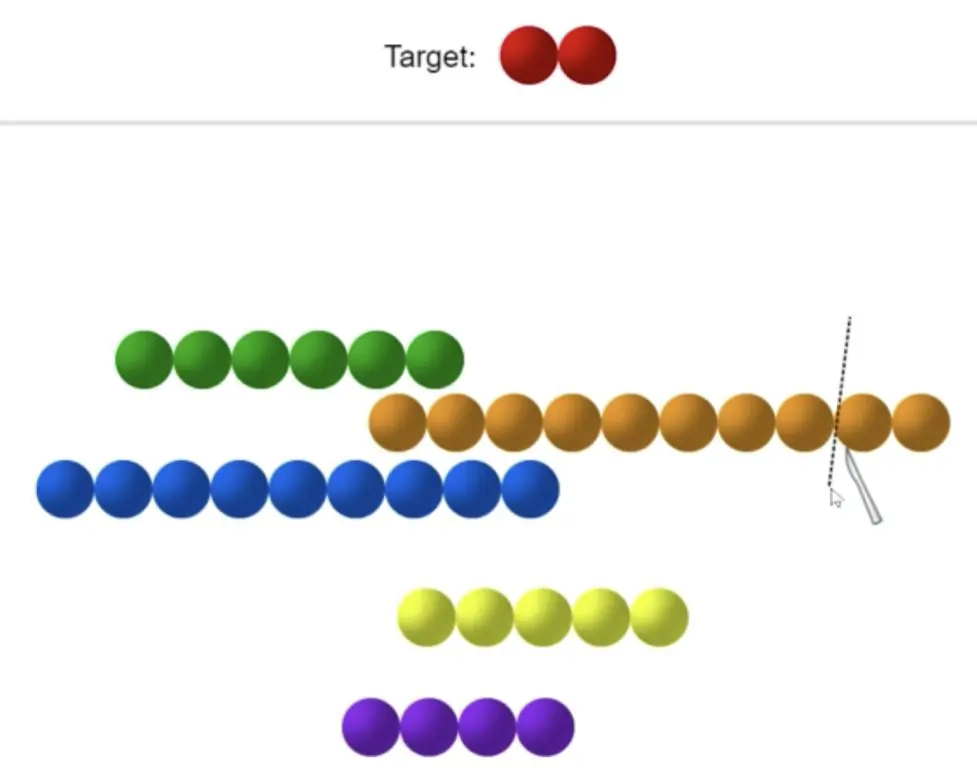

In one of the games (NumberBeads), children learn about addition, subtraction, and the number line by combining and segmenting strings of beads. The image below shows an example where the target is a chain of two beads. In this instance, a knife is being used to slice the larger chain of beads into chains of two beads. Participants can combine and cut these up as they wish, and when this is done correctly the chain disappears in a pleasing puff of success!
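To make the arithmetic behind the mechanic concrete, here is a toy model of the bead operations described above. It is purely hypothetical: the function names, and the rule that a leftover remainder stays as a shorter chain, are our assumptions and not the game's actual code. Cutting a chain is repeated subtraction of the target length, joining chains is addition, and a chain only "disappears" when it matches the target.

```python
from typing import List


def cut_chain(length: int, piece: int) -> List[int]:
    """Slice a chain of `length` beads into pieces of `piece` beads.
    Any remainder is kept as one shorter chain."""
    full, remainder = divmod(length, piece)
    pieces = [piece] * full
    if remainder:
        pieces.append(remainder)
    return pieces


def combine(*chains: int) -> int:
    """Join chains end to end: addition on the number line."""
    return sum(chains)


def matches_target(chains: List[int], target: int) -> List[bool]:
    """True for each chain that has exactly the target length (and would vanish)."""
    return [c == target for c in chains]


print(cut_chain(6, 2))                     # [2, 2, 2]
print(cut_chain(7, 2))                     # [2, 2, 2, 1]
print(matches_target(cut_chain(7, 2), 2))  # [True, True, True, False]
print(combine(1, 1))                       # 2
```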

The game continually adapts to the player’s ability, building up their knowledge of the number line, fluency with the number line, and understanding of numerals (utilizing the performance and personal gamification dimensions). In an interview, Prof. Laurillard calls it a “constructionist” game, as children “are actually constructing the game themselves.” She also suggests that similar games could be developed to train language skills in people with dyslexia. You can read the full interview here.
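The article does not say how the game adapts, so the following is only a sketch of one widely used approach: a transformed staircase, here modelled on the 2-down/1-up rule from psychophysics, which converges on roughly 70.7% correct. Difficulty rises after two consecutive correct responses and falls after any error. The level range and step size are arbitrary assumptions.

```python
class Staircase:
    """Hypothetical adaptive-difficulty rule: move up one level after two
    consecutive correct responses, down one level after any error."""

    def __init__(self, level=1, min_level=1, max_level=10):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self.streak = 0  # consecutive correct responses since the last level change

    def update(self, correct):
        """Record one response and return the difficulty level for the next trial."""
        if correct:
            self.streak += 1
            if self.streak == 2:
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


stair = Staircase()
levels = [stair.update(c) for c in [True, True, True, True, False, True]]
print(levels)  # [1, 2, 2, 3, 2, 2]
```

Keeping the player near a fixed success rate is what lets a task stay challenging without becoming discouraging, which is the "stretch and extend" quality described below.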

Games such as these provide a high-quality educational resource at a very low cost. According to Prof. Laurillard, these games have tremendous value, because they provide individualised and enjoyable mathematics tuition to students both with and without dyscalculia. One player said, “I’d play it all day,” while a teacher said “I was absolutely astounded by the work they were doing with this. They were clearly seeing things in a different way.” Students clearly enjoy playing these games, which continually stretch and extend them (personal dimension of gamification taxonomy).

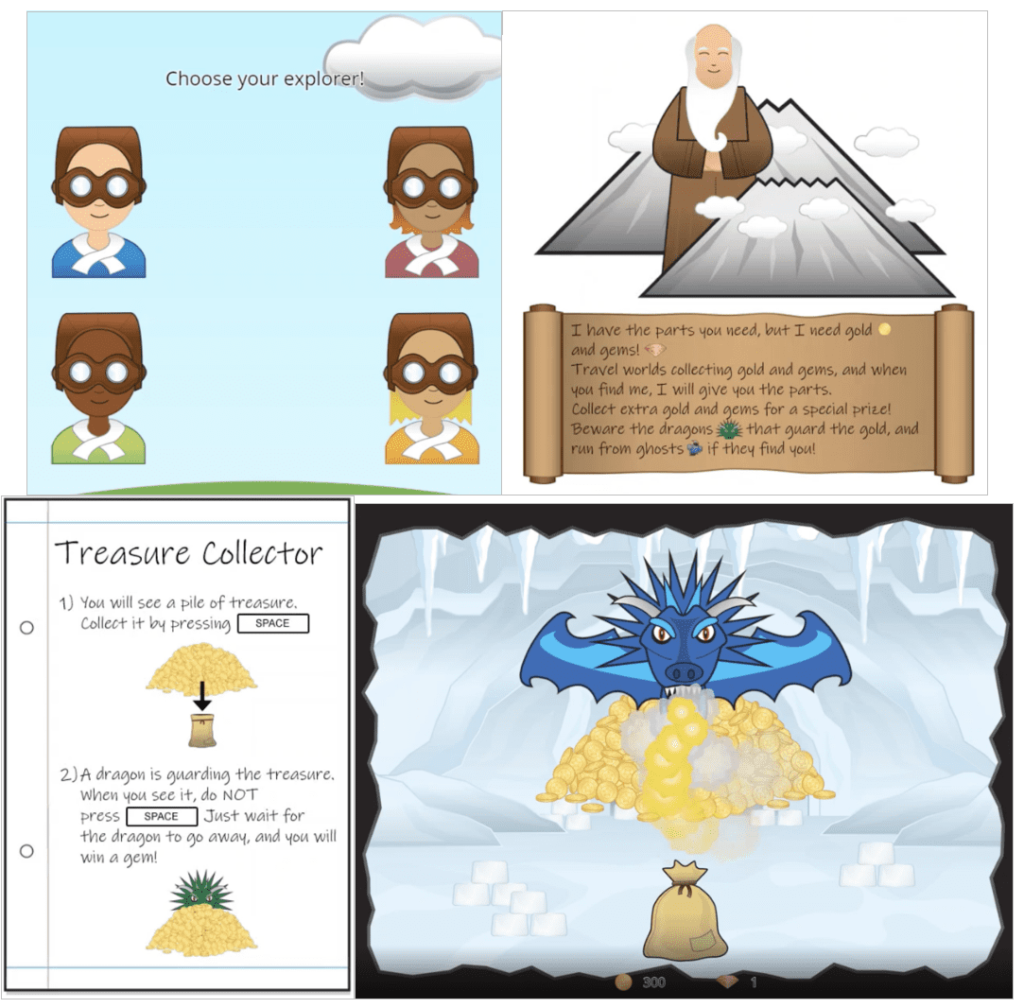

We previously mentioned the challenges of attrition for online longitudinal research (Palan & Schitter, 2018). Professor Nikolaus Steinbeis, based at UCL, wanted children (aged 7–10 years) to train for 10 minutes a day for 8 weeks to improve executive function. Such a task would have been impossible without gamification.

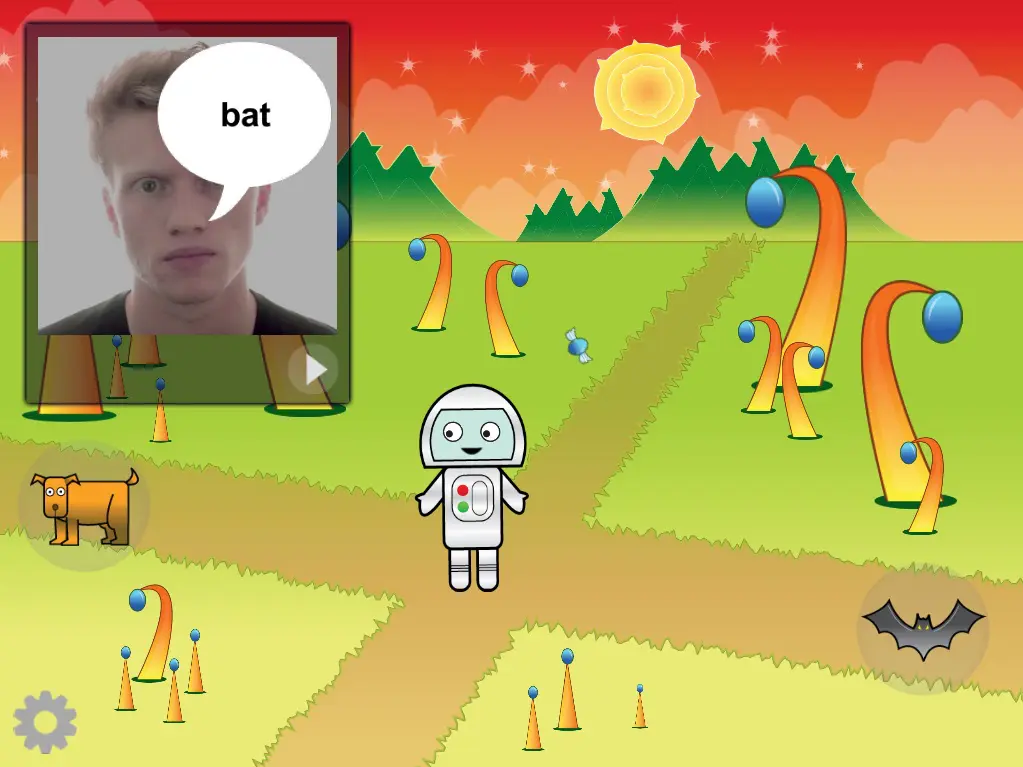

Children would be training on the Go/No-Go task, which tests attention and response inhibition by asking participants to respond to certain stimuli as fast as possible (Go trials) versus withholding a response to other stimuli (No-Go trials) (see an example here). To keep participants engaged, the classic Go/No-Go task was embedded into a larger narrative of being an explorer (an example of the fictional dimension of gamification).
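A Go/No-Go session is typically summarized by how often participants respond on Go trials (the hit rate), how often they fail to withhold a response on No-Go trials (commission errors, the main index of response inhibition), and how fast they respond on correct Go trials. The sketch below computes these three measures; the `Trial` record is a hypothetical format, not the one used in the study.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Trial:
    is_go: bool                      # True for a Go stimulus, False for a No-Go stimulus
    responded: bool                  # did the participant respond?
    rt_ms: Optional[float] = None    # response time in ms, if they responded


def score_go_nogo(trials: List[Trial]) -> dict:
    go = [t for t in trials if t.is_go]
    nogo = [t for t in trials if not t.is_go]
    hits = [t for t in go if t.responded]
    commissions = [t for t in nogo if t.responded]  # failures to withhold
    nan = float("nan")
    return {
        "hit_rate": len(hits) / len(go) if go else nan,
        "commission_rate": len(commissions) / len(nogo) if nogo else nan,
        "mean_go_rt_ms": mean(t.rt_ms for t in hits) if hits else nan,
    }


trials = [
    Trial(True, True, 420.0), Trial(True, True, 380.0), Trial(True, False),
    Trial(False, False), Trial(False, True, 300.0), Trial(False, False),
]
result = score_go_nogo(trials)
print(result)
```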

The image below shows how participants chose their own avatar, which was integrated into the story. The Go/No-Go task was then reskinned in a variety of situations, including when to dig for treasure, or when to steal gold from a dragon (shown below), or when to drive straight or swerve to avoid ice on the road. The narrative elements and varied gameplay increased compliance and helped deliver quality adaptive training for the research project.
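Reskinning works because the underlying trial logic never changes; only the labels and artwork do. A minimal sketch of that separation follows. The skin names and stimulus labels are invented for illustration, and the 75% Go probability is an assumption (Go trials are commonly made more frequent than No-Go trials, so that withholding becomes harder).

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Skin:
    name: str
    go_stimulus: str     # hypothetical label for "act now"
    nogo_stimulus: str   # hypothetical label for "hold back"


# Invented skins, loosely inspired by the scenarios described above.
SKINS = [
    Skin("digging", "sparkling ground", "rocky ground"),
    Skin("dragon", "dragon asleep", "dragon awake"),
    Skin("driving", "clear road", "ice on the road"),
]


def make_block(skin, n_trials, p_go=0.75, seed=None):
    """Generate one block of (is_go, stimulus_label) trials.
    The Go/No-Go logic is identical for every skin; only the labels differ."""
    rng = random.Random(seed)
    block = []
    for _ in range(n_trials):
        is_go = rng.random() < p_go
        block.append((is_go, skin.go_stimulus if is_go else skin.nogo_stimulus))
    return block


for is_go, label in make_block(SKINS[1], 5, seed=1):
    print("GO  " if is_go else "STOP", label)
```

Because every skin produces the same (is_go, stimulus) records, the scoring code for the plain task can be reused unchanged across all the narrative variants.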

According to Prof Bishop, while you can typically get an adult to do around 100 trials of a boring adaptive task, children will ask, “Is there much more of this?” after three or four trials. This is bad news if you want them to train every day for 8 weeks! And yet, with the Treasure Collector games, Prof Steinbeis had children train for 10 minutes on the game, four times a week for 8 weeks. Overall, participants completed around 4,000 trials in total and reported that they still enjoyed the game. Without gamification, this study would have been impossible; by employing gamification, a whole range of developmental research questions becomes possible.

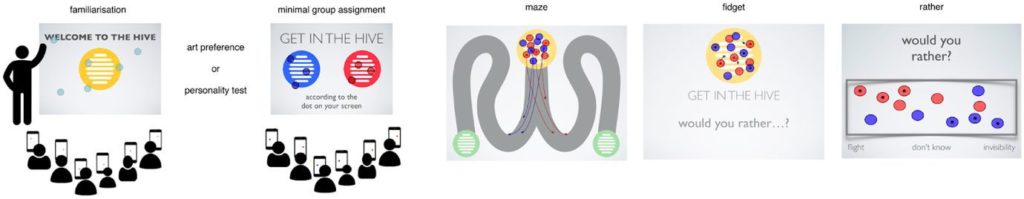

The Hive (developed with Professor Daniel Richardson at UCL) is a research platform for studying how people think, feel, and behave together in groups (Bazazi et al., 2019; Neville et al., 2020). It works as an app that people can access with their smartphones. After logging in, each participant is shown a dot that they can drag around. The coordinates of each dot are recorded, allowing experimenters to analyze trajectories and rest periods in a similar way to experiments using eye or mouse tracking. Each participant sees their own dot, and other participants’ dots, moving on the central display. They then perform different tasks while monitoring other individuals’ decisions, represented by the movements of the other dots, as shown below.
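As with mouse or eye tracking, two basic measures can be computed from the recorded dot positions: how far the dot travelled, and when it stayed still. The sketch below assumes a hypothetical format of time-ordered (time in seconds, x, y) samples, which is not necessarily the Hive's own data format, and detects rest periods with a simple dispersion-threshold rule of the kind used for fixation detection.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y)


def path_length(samples: List[Sample]) -> float:
    """Total distance travelled by a dot across time-ordered samples."""
    return sum(
        math.hypot(x1 - x0, y1 - y0)
        for (_, x0, y0), (_, x1, y1) in zip(samples, samples[1:])
    )


def rest_periods(samples: List[Sample], min_duration: float,
                 tolerance: float) -> List[Tuple[float, float]]:
    """(start, end) times of intervals in which the dot stayed within
    `tolerance` of its position at the start of the interval for at
    least `min_duration` seconds."""
    rests = []
    start = 0
    for i in range(1, len(samples) + 1):
        # Close the current interval at the end of the data, or when the
        # dot moves beyond the tolerance radius around the interval's start.
        left = i == len(samples) or math.hypot(
            samples[i][1] - samples[start][1],
            samples[i][2] - samples[start][2],
        ) > tolerance
        if left:
            t_start, t_end = samples[start][0], samples[i - 1][0]
            if t_end - t_start >= min_duration:
                rests.append((t_start, t_end))
            start = i
    return rests


# Hypothetical trace: the dot moves, hovers for about 0.3 s, then moves again.
trace = [(0.0, 0.0, 0.0), (0.1, 3.0, 4.0), (0.2, 3.0, 4.0),
         (0.3, 3.2, 4.0), (0.4, 3.0, 4.1), (0.5, 6.0, 8.0)]
print(round(path_length(trace), 3))
print(rest_periods(trace, min_duration=0.25, tolerance=1.0))  # [(0.1, 0.4)]
```

The tolerance and minimum duration are free parameters; in practice they would need to be chosen relative to the screen size and sampling rate.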

One of the studies using the Hive investigates the link between mimicry and self-categorization, and attempts to answer the following question: Do we always do what others do and, if not, what factors influence our decisions in a group? (Neville et al., 2020). The experiment has been conducted at multiple public events, such as at the Science Museum in London, with groups of between four and twelve people. Participants are assigned to one of two groups, red dots or blue dots. They then play a series of games that involve moving their dots and watching the choices of the other participants (belonging to both their own group and the other one).

Overall, the results show that participants are influenced by the movements of confederate dots that are the same color as their own. The authors conclude that mimicry is affected by in-group/out-group knowledge, i.e., knowledge of whether people belong to the same category as us. The Hive project allows researchers to study a fundamental question: Do people make decisions differently when they think as individuals or as a crowd? Instead of paying participants to perform a long and boring experiment in a lab, the Hive allows researchers to investigate this issue anywhere, using people’s smartphones, without any additional costs, whilst maintaining the precision of lab-based testing.

Our literature review suggests that gamification is effective at increasing participant engagement and retention, and even increasing data quality in both qualitative and quantitative experiments. However, Bailey et al. (2015) reported a concern that applying gaming mechanics to questions can change the character of the answers and lead to qualitatively different responses. Therefore, to what extent does gamification change behavior?

A common concern for researchers is whether gamification will fundamentally change the outcomes of task behavior or surveys being administered. Will gamifying my research mean the findings are no longer valid? We can boil these questions down to ‘do external rewards and motivators change behavior?’ The answer to this final question is certainly ‘yes’.

A fascinating series of studies by Manohar et al. (2015) investigated the effect of extrinsic reward on the speed-accuracy trade-off, which is often treated as a fundamental law: as we move faster, we become less accurate. However, monetary incentives break this law, and participants become both faster AND more accurate. This is just one of many examples showing that extrinsic reinforcers change behavior. That said, external reinforcers are often present in traditional lab-based behavioral economics studies. Total score bars are commonplace in value-based decision-making studies, yet researchers in the field still argue about the extent to which they bias behavior in line with the assumptions of prospect theory (Kahneman & Tversky, 1979).

Ryan and Deci (2000) distinguish between two forms of motivation: intrinsic and extrinsic. Intrinsic motivation relates to the individual’s satisfaction in performing an activity in and of itself, while extrinsic motivation occurs when the activity is performed to obtain a separate, tangible outcome, e.g., money as a reward. This dichotomy is mirrored by the distinction between extrinsic (explicit) and intrinsic (implicit) game elements in Toda et al.’s (2019) taxonomy. It is therefore likely that explicit and implicit game elements affect extrinsic and intrinsic motivation in different ways.

Mekler et al. (2017) investigated the effects of individual game elements on intrinsic and extrinsic motivation in an image annotation task. They found that gamification significantly improved extrinsic factors like performance, especially when using leader boards and points, but not intrinsic motivation or competence (the perceived extent of one’s own actions as the cause of desired consequences in one’s environment). A related point was raised by Looyestyn et al. (2017), who noted that the positive effects of gamification seemed to lessen over time, suggesting that the performance/measurement dimension of gamification may only be effective in the short run.

Looyestyn et al. (2017) suggest that in order to be successful in the long term, gamified applications should focus on intrinsic, instead of extrinsic, motivation, i.e., focus on the personal and fictional dimensions of gamification. For future applications, it is crucial to design game environments that enhance users’ intrinsic motivation to keep them engaged over time, potentially moving more towards games instead of gamification (see below).

Lastly, we suggest that differences between traditional tasks and games may not be such a bad thing for gamified research. In such comparisons, lab-based testing is usually treated as the ‘ground truth’ in psychology and behavioral science. However, lab conditions and tasks can be quite artificial. Psychological tasks are often reduced to their most basic elements so that scientists can make accurate inferences about the factors that influence behavior, yet lab-based findings often fail to predict behavior outside the lab (Kingstone et al., 2008; Shamay-Tsoory & Mendelsohn, 2019).

Therefore, even if you do find different results between paradigms run in the lab and gamified versions of tasks, that does not mean that your game-based findings are inherently wrong or less valid. We do not yet know which of these is closer to the ‘ground truth’. It may be that games, which are often more natural and more intrinsically motivating, are in fact more relevant to real-world decision-making.

There is a subtle but important distinction between using gamification and using games in research. Whilst gamification refers to adding game elements to existing tasks, it is also possible to create research games instead of gamifying existing research paradigms. Research games can be intrinsically motivating (and thus, hopefully, maintain engagement over time) and allow for the exploration of more naturalistic behaviors.

Typically, the objective of gamification is to increase motivation and engagement. This is often achieved by using extrinsic motivators such as points, badges, and leader boards (i.e., the performance/measurement dimension), but what is the point of points? We can imagine gamifying reading by making each page worth a point, motivating someone to read more each day to earn more points. Helpfully, books already have points printed on each page (the page numbers), so you have a running total score, but that’s probably not what motivates any of us to read.

This approach to gamification ignores the fact that the book itself is intrinsically motivating; to put it another way, a good book doesn’t need gamifying. The objective of a game is to provide pleasure or to teach a new skill, and therefore the motivation to play it is often intrinsic (i.e., the personal and fictional dimensions). This intrinsic/extrinsic distinction in motivation changes the way behaviors are learned and reinforced.

Most research tasks are designed to test a very specific question, and as such they will only have a limited number of response options that can easily be categorized as correct or incorrect. However, games typically have a larger range of responses, which can lead to improvisation. They also offer the player the opportunity to explore a world, and learning is often implicit and directed by the player rather than by the experimenter. Compared to gamification, games often employ a more constructionist approach that leads to discovery learning.

The FunMaths game is one such example, as participants can achieve their goals in several different ways and are given the opportunity to explore different options. This differs from Treasure Collector, which uses game elements such as a story narrative to increase motivation and engagement for a single, simple task (the Go/No-Go task). Thus, one can argue that Treasure Collector is an example of gamification of the Go/No-Go task, whereas FunMaths is an educational game designed to improve learning. However, when gamification is done well, it should be nearly impossible to distinguish from a game.

When using games to investigate naturalistic behaviors, researchers must contend with a wider array of behaviors—statistically, we could refer to this as a larger parameter space. Each decision is not made in isolation, and choices are likely to interact with one another, creating large, multi-factorial designs. Rich datasets like this are perfect for machine learning algorithms, which can help identify which combinations of behaviors best predict outcomes. However, generating meaningful inferences from potentially enormous matrices of behavior combinations requires an even larger number of datapoints, i.e., lots of participants.
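The arithmetic behind this point is worth making explicit. If a game involves n independent decisions with k options each, there are k to the power n possible behavior profiles, so the sample needed to observe each profile even a handful of times grows exponentially. The sketch below assumes binary decisions and an arbitrary target of 10 observations per profile; machine-learning models do not need every combination to be observed, so treat these numbers as an illustration of scaling rather than a sample-size calculation.

```python
import math


def n_profiles(options_per_decision):
    """Distinct behavior profiles when every choice can combine with every other."""
    return math.prod(options_per_decision)


def participants_needed(options_per_decision, obs_per_profile):
    """Participants required so that, on average, each profile is observed
    `obs_per_profile` times (assuming all profiles are equally common)."""
    return n_profiles(options_per_decision) * obs_per_profile


for n_decisions in (2, 5, 10, 15):
    print(n_decisions, participants_needed([2] * n_decisions, obs_per_profile=10))
# 2 40
# 5 320
# 10 10240
# 15 327680
```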

With traditional lab-based or online testing, this would increase participant costs hugely. However, as we have highlighted, games can be intrinsically motivating and genuinely enjoyable, substantially reducing participant fees (perhaps even removing them altogether). For each experiment, there will be a break-even point: above a certain number of participants, it becomes cheaper to invest in developing an exciting game than to use traditional behavioral science paradigms and pay participants for their time.
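The break-even point can be written as N* = C_dev / (f_trad - f_game), where C_dev is the cost of developing the game, f_trad is the fee per participant in a traditional study, and f_game is any per-participant cost that remains with the game (e.g., small prizes). The sketch below rounds N* up to a whole participant; all figures in the usage lines are invented purely for illustration.

```python
import math


def break_even_participants(game_dev_cost, fee_per_participant, game_fee_per_participant=0.0):
    """Smallest N at which building a game is no more expensive than paying N participants.

    Traditional cost: N * fee_per_participant
    Game cost:        game_dev_cost + N * game_fee_per_participant
    """
    saving_per_participant = fee_per_participant - game_fee_per_participant
    if saving_per_participant <= 0:
        return None  # the game never pays for itself
    return math.ceil(game_dev_cost / saving_per_participant)


# Hypothetical figures: £30,000 to build the game, £5 per participant traditionally.
print(break_even_participants(30_000, 5.0))       # 6000
print(break_even_participants(30_000, 5.0, 1.0))  # 7500
```

Beyond N*, every additional participant recruited through the game is cheaper than one recruited traditionally, which is why the case for games strengthens as target sample sizes grow.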

Sea Hero Quest is reported to have recorded data from 4.3 million players, who have played for a total of over 117 years. Collecting 117 years’ worth of data via a recruitment service such as Prolific (equivalent to 117 participants playing for 525,600 minutes each, at £7.50 per hour) would cost roughly £7.7 million in participant payments alone, before any platform fees. As tools for making games become cheaper and more accessible, and the need for larger samples grows (e.g., for reproducibility), games will be an important means of scaling up experimental, social, behavioral, and economic research.
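The cost estimate can be reproduced in a few lines using the figures quoted in the text: a 365-day year (525,600 minutes) and £7.50 per hour, counting participant payments only. Any service fee charged by the recruitment platform would come on top of this figure.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def participant_payment_cost(years_of_play, hourly_rate_gbp):
    """Cost of paying participants for the same total playing time (payments only)."""
    hours = years_of_play * MINUTES_PER_YEAR / 60
    return hours * hourly_rate_gbp


cost = participant_payment_cost(117, 7.50)
print(f"£{cost:,.0f}")  # £7,686,900
```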

Conclusions

Herein, we have reviewed the role of gamification in behavioral science. We have endeavored to define gamification and outline the different elements that can be considered when creating behavioral science games. We have also provided examples of three different behavioral science games (videos of these games and more can be found here). We propose that gamification will increase engagement and retention in online behavioral science studies. However, one must consider whether this will in some way affect the data being collected. Anecdotal evidence from researchers and participants suggests that the benefits of employing gamification and game-based learning far outweigh these concerns.

First published in the BEGuide 2021, which can be accessed here.
